Integrate Ollama with Jan

Quick Introduction

Ollama lets you run large language models locally. This guide shows two ways to use your existing Ollama models with Jan: integrating the Ollama server with the Jan UI, and migrating a downloaded model from Ollama to Jan. The llama2 model is used as the example throughout.

Steps to Integrate Ollama Server with Jan UI

1. Start the Ollama Server

- Select the model you want to use from the Ollama library.

- Run your model by using the following command:

ollama run <model-name>

- According to the Ollama documentation on OpenAI compatibility, you can use the http://localhost:11434/v1/chat/completions endpoint to interact with the Ollama server. To point Jan at it, modify the openai.json file in the ~/jan/engines folder to include the full URL of the Ollama server.

~/jan/engines/openai.json

{
  "full_url": "http://localhost:11434/v1/chat/completions"
}
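
If openai.json already holds other settings, replacing the whole file by hand risks dropping them. The following is a minimal Python sketch, not part of Jan itself, that sets full_url while keeping any other keys the file may contain. The function name set_full_url is hypothetical; the URL is the one given above.

```python
import json
from pathlib import Path

# Endpoint from the Ollama OpenAI-compatibility documentation, as quoted in this guide.
OLLAMA_URL = "http://localhost:11434/v1/chat/completions"


def set_full_url(config_path: Path, url: str = OLLAMA_URL) -> dict:
    """Set "full_url" in an engine config file, preserving any other keys."""
    config = {}
    if config_path.exists():
        # Keep whatever settings are already stored in the file.
        config = json.loads(config_path.read_text(encoding="utf-8"))
    config["full_url"] = url
    # Create the parent folder if it does not exist yet.
    config_path.parent.mkdir(parents=True, exist_ok=True)
    config_path.write_text(json.dumps(config, indent=2) + "\n", encoding="utf-8")
    return config
```

Calling set_full_url(Path.home() / "jan" / "engines" / "openai.json") would apply it to the path used in this guide; adjust the path if your Jan data folder lives elsewhere.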

2. Modify a Model JSON

- Navigate to the ~/jan/models folder.

- Create a folder named <ollama-modelname>, for example, llama2.

- Create a model.json file inside the folder with the following configuration:

  - Set the id property to the Ollama model name.

  - Set the format property to api.

  - Set the engine property to openai.

  - Set the state property to ready.

~/jan/models/llama2/model.json

{
  "sources": [
    {
      "filename": "llama2",
      "url": "https://ollama.com/library/llama2"
    }
  ],
  "id": "llama2",
  "object": "model",
  "name": "Ollama - Llama2",
  "version": "1.0",
  "description": "Llama 2 is a collection of foundation language models ranging from 7B to 70B parameters.",
  "format": "api",
  "settings": {},
  "parameters": {},
  "metadata": {
    "author": "Meta",
    "tags": ["General", "Big Context Length"]
  },
  "engine": "openai"
}
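
To repeat this step for other Ollama models, the file can be generated rather than written by hand. The sketch below is one possible approach in Python, mirroring the fields of the llama2 example above. The function names, the generated description, and the placeholder author and tags are assumptions for illustration, and the model name is assumed to be a plain library name without a tag suffix.

```python
import json
from pathlib import Path


def build_model_config(model_name: str) -> dict:
    """Return a model.json dict with the same fields as the llama2 example."""
    return {
        "sources": [
            {
                "filename": model_name,
                # Assumes the model is listed in the Ollama library under this name.
                "url": f"https://ollama.com/library/{model_name}",
            }
        ],
        "id": model_name,  # must match the Ollama model name
        "object": "model",
        "name": f"Ollama - {model_name}",
        "version": "1.0",
        # Placeholder text; replace with a real description of the model.
        "description": f"{model_name} served by a local Ollama server.",
        "format": "api",
        "settings": {},
        "parameters": {},
        # Placeholder metadata; fill in the actual author and tags.
        "metadata": {"author": "unknown", "tags": ["General"]},
        "engine": "openai",
    }


def write_model_config(models_dir: Path, model_name: str) -> Path:
    """Create <models_dir>/<model_name>/model.json and return its path."""
    target = models_dir / model_name / "model.json"
    target.parent.mkdir(parents=True, exist_ok=True)
    text = json.dumps(build_model_config(model_name), indent=2) + "\n"
    target.write_text(text, encoding="utf-8")
    return target
```

For the example in this guide, write_model_config(Path.home() / "jan" / "models", "llama2") would create ~/jan/models/llama2/model.json.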

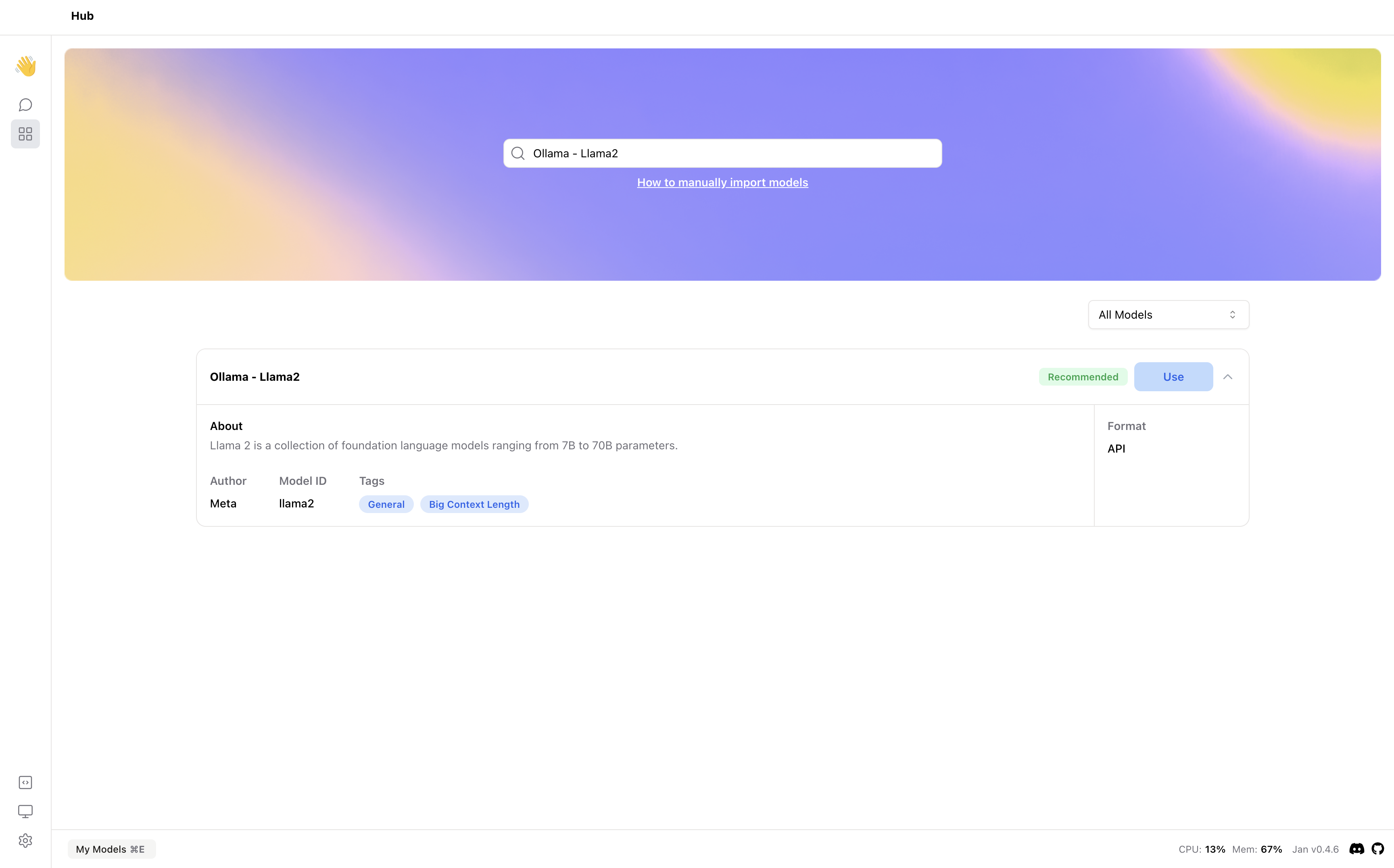

3. Start the Model

- Restart Jan and navigate to the Hub.

- Locate your model and click the Use button.

4. Try Out the Integration of Jan and Ollama
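
If the model does not respond in Jan, it can help to confirm that the Ollama endpoint from step 1 answers on its own. The following is a rough Python sketch using only the standard library. It assumes the server is running locally on port 11434 and that responses follow the OpenAI chat completion shape; the helper names build_chat_request and ask are hypothetical.

```python
import json
import urllib.request

# Endpoint configured in openai.json in step 1.
OLLAMA_URL = "http://localhost:11434/v1/chat/completions"


def build_chat_request(model: str, prompt: str, url: str = OLLAMA_URL) -> urllib.request.Request:
    """Build an OpenAI-style chat completion request for the Ollama server."""
    payload = {
        "model": model,
        "messages": [{"role": "user", "content": prompt}],
    }
    return urllib.request.Request(
        url,
        data=json.dumps(payload).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )


def ask(model: str, prompt: str) -> str:
    """Send the request and return the assistant's reply text."""
    with urllib.request.urlopen(build_chat_request(model, prompt), timeout=120) as resp:
        body = json.load(resp)
    # OpenAI-compatible responses carry the reply under choices[0].message.content.
    return body["choices"][0]["message"]["content"]
```

With the server running, ask("llama2", "Hello") should return a reply. A connection error suggests that the Ollama server is not listening on the expected port.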